Sunday October 12, 2025

Weekend database upgrade & maintenance.

Login failures lock users out of your app. From forgotten passwords to broken authentication flows, a bad login experience is a nightmare for both users and support teams.

While a simple login screen seems easy to test, complexity grows fast when you introduce two-factor authentication (2FA), magic links, SSO, or team-based accounts. Can your tests keep up?

In this guide, we’ll show you how to automate 2FA email verification using Selenium and Mailsac. You’ll send a real email, extract the one-time password (OTP) programmatically, and validate authentication—ensuring your app’s login works flawlessly.

You’re probably already familiar with Selenium, a core tool for browser automation. (Fun fact: we’re a proud Selenium sponsor!)

Have you worked directly with WebDriver? WebDriver is what allows Selenium to control a browser just like a real user—clicking buttons, filling out forms, and navigating pages. For our automated 2FA test, we’ll use WebDriver to interact with the login flow, retrieve the OTP from an email, and complete authentication.

Let’s start by installing Selenium via npm:

npm init

npm install selenium-webdriver chromedriver dotenv

Alternatively, you can install chromedriver manually by downloading it from Google or through your package manager. For example, on macOS:

brew install chromedriver

Mailsac provides disposable email addresses and an API to fetch incoming emails. We’ll use it to verify that a 2FA email is received and contains the correct code.

Why Use Mailsac?

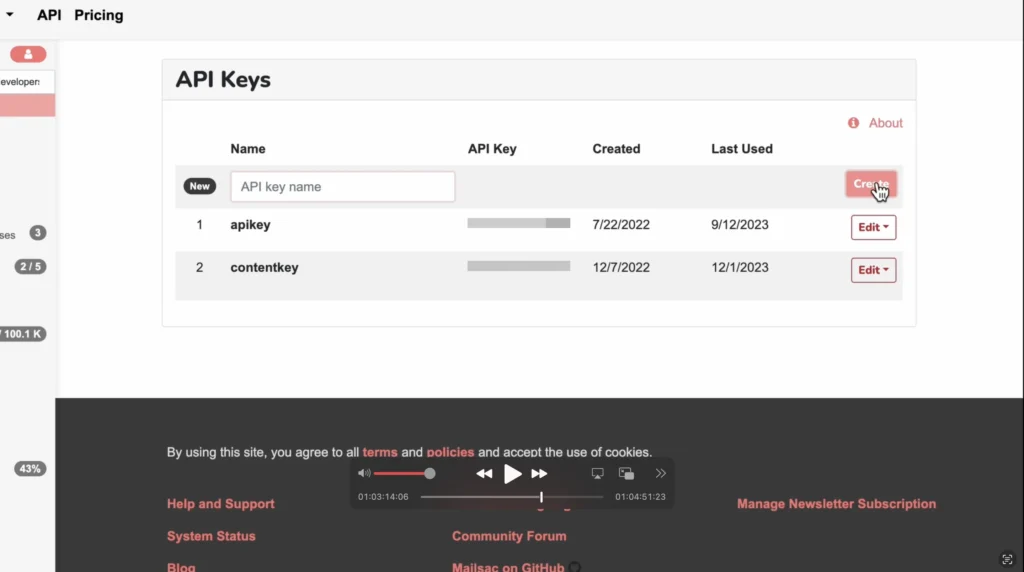

Setting Up Mailsac API Access

1. Create a Free Mailsac Account:

2. Install Mailsac’s API Client

Install the official Mailsac API client:

npm install @mailsac/api dotenv

3. Store Your API Key Securely

Never hardcode your API key. Use environment variables by creating a .env file:

MAILSAC_API_KEY=your-api-key-here
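To fail fast when the key is missing (instead of getting a confusing 401 from the API later), a small guard can run right after require('dotenv').config(). This is a minimal sketch; requireEnv is a hypothetical helper, not part of dotenv or the Mailsac client.

```javascript
// Hypothetical helper: read a required environment variable or stop early.
// Call it after require('dotenv').config() so values from .env are loaded first.
function requireEnv(name) {
  const value = process.env[name];
  if (value === undefined || value.trim() === "") {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value;
}

// Example usage:
// const apiKey = requireEnv("MAILSAC_API_KEY");
```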

Now that we’ve installed Selenium and set up our Mailsac account, we can start writing our test script to automatically log in, retrieve the 2FA code, and verify it in our application.

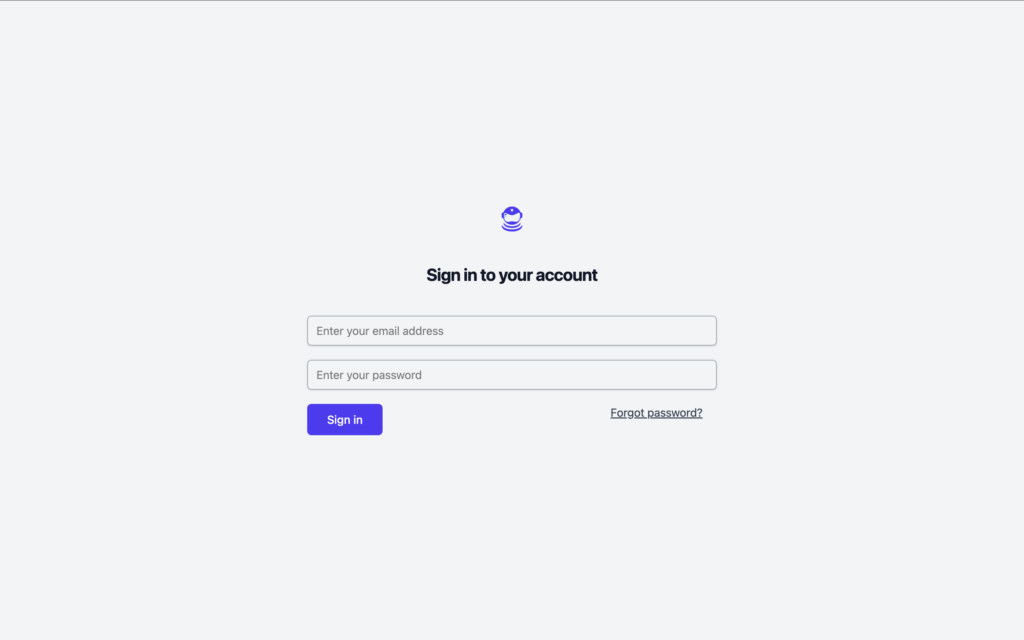

Let’s introduce our sample application that we’re going to test, called “Active Forums”.

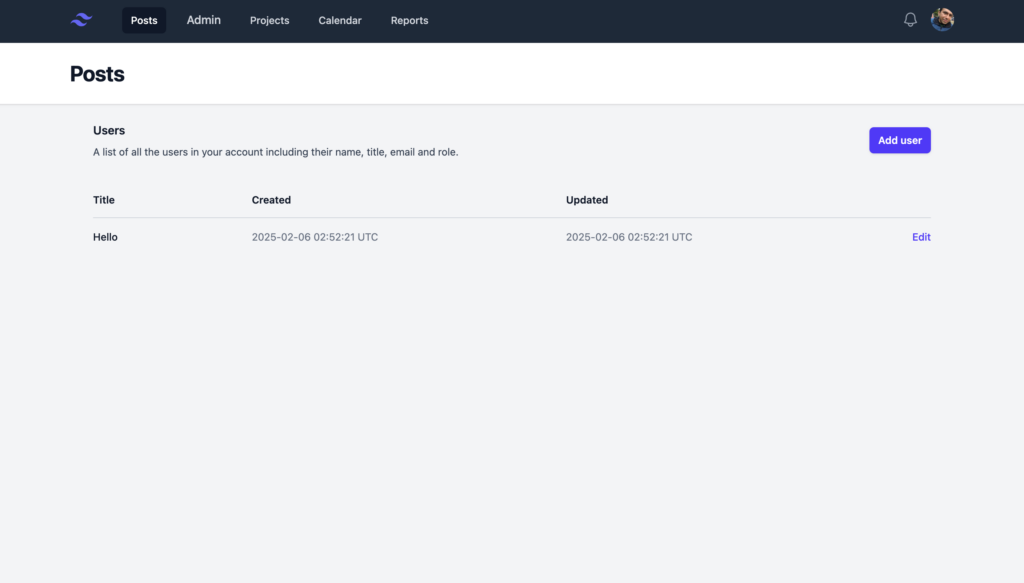

It’s an early version of a forum application. It has the basics: sign-in, a landing page that shows all your posts, and an Admin Panel.

Let’s automate the process of logging in, retrieving the 2FA code, and verifying it.

Our first task is to navigate to the login page, enter the username and password, and submit the form.

1.1 Initialize Selenium WebDriver

const { Builder, By, until } = require('selenium-webdriver');

require('dotenv').config();

const { Mailsac } = require('@mailsac/api');

async function login(emailAddress,accountPassword) {

let driver = await new Builder().forBrowser('chrome').build();

try {

await driver.get("http://localhost:3000/users/sign_in");

await driver.findElement(By.id("email")).sendKeys(emailAddress);

await driver.findElement(By.id("password")).sendKeys(accountPassword);

await driver.findElement(By.id("sign-in")).click();

} catch (error) {

console.error("Login test failed:", error);

}

return driver;

}

Here we:

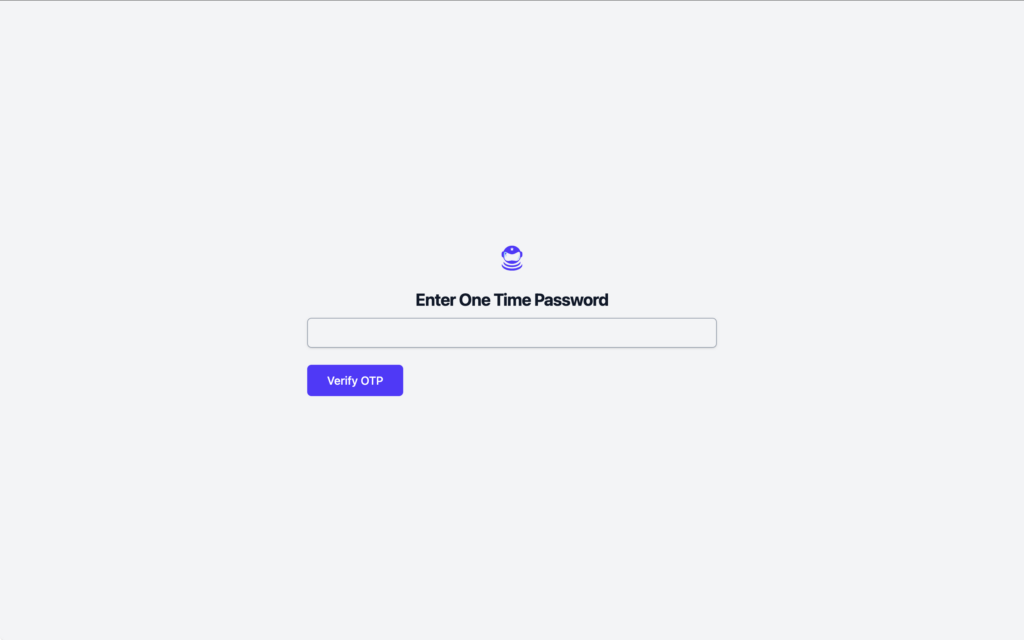

Once the user is on the 2FA screen, we need to fetch the one-time code from the email inbox.

Load the API in the script:

require('dotenv').config();

const { Mailsac } = require('@mailsac/api');

Now, we write a function to check the inbox and extract the 6-digit code from the email.

async function get2FACode(emailAddress) {

try {

console.log(`Checking inbox for: ${emailAddress}`);

// Fetch the latest messages from the inbox

const mailsac = new Mailsac({ headers: { "Mailsac-Key": process.env.MAILSAC_API_KEY } });

const results = await mailsac.messages.listMessages(emailAddress);

const messages = results.data;

if (!messages.length) {

console.log("No 2FA email received yet.");

return null;

}

// Get the latest message ID

const latestMessageId = messages[0]._id;

// Fetch email body

const emailBody = await mailsac.messages.getBodyPlainText(emailAddress,latestMessageId,{ download : true });

// Extract 6-digit 2FA code using regex

const match = emailBody.data.match(/\b\d{6}\b/);

if (match) {

console.log(`2FA Code found: ${match[0]}`);

return match[0];

} else {

console.log("No 2FA code found in email.");

return null;

}

} catch (error) {

console.error("Error retrieving 2FA email:", error);

}

}

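What This Does: it lists the messages in the inbox, takes the most recent one, downloads its plain-text body, and pulls out the first standalone 6-digit number as the 2FA code. If there is no message or no code yet, it returns null so the caller can try again.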
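Separating the regex from the API call makes the extraction logic easy to unit test without a live inbox. A minimal sketch, where extractOtp is a hypothetical helper that uses the same \b\d{6}\b pattern as get2FACode:

```javascript
// Hypothetical helper: pull the first standalone 6-digit code out of an email body.
// The \b word boundaries stop it from matching 6 digits inside a longer number.
function extractOtp(body) {
  if (typeof body !== "string") {
    return null;
  }
  const match = body.match(/\b\d{6}\b/);
  return match ? match[0] : null;
}

console.log(extractOtp("Your verification code is 482913. It expires in 10 minutes.")); // prints 482913
```

Inside get2FACode, the regex block could then shrink to return extractOtp(emailBody.data);.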

Now that we have the 2FA code, we return to Selenium to enter it into the form.

async function enter2FACode(driver, emailAddress) {

let attempts = 0;

let otpCode = null;

// Polling for 2FA code with a maximum of 5 attempts

while (!otpCode && attempts < 5) {

otpCode = await get2FACode(emailAddress);

if (!otpCode) {

console.log("Waiting for 2FA code...");

await new Promise(resolve => setTimeout(resolve, 5000)); // Wait 5 seconds before retrying

attempts++;

}

}

if (!otpCode) {

console.error("Failed to retrieve 2FA code after multiple attempts.");

return;

}

try {

// Enter the 2FA code into the form

await driver.findElement(By.id("otp_code")).sendKeys(otpCode);

// Submit the form

await driver.findElement(By.name("commit")).click();

console.log("2FA code submitted, verifying login...");

// Wait for successful login redirect

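// Assumes the post-login landing page is the site root; urlContains("/") matches every URL, so it never fails

await driver.wait(until.urlIs("http://localhost:3000/"), 10000);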

console.log("Login successful!");

} catch (error) {

console.error("2FA verification failed:", error);

}

}

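What This Does: it polls the inbox up to five times, five seconds apart, until get2FACode returns a code, types that code into the otp_code field, submits the form, and waits up to 10 seconds for the post-login redirect.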
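The polling loop in enter2FACode can be pulled out into a reusable helper. The sketch below is one way to structure it, not part of Selenium or Mailsac: pollFor is a hypothetical name; it calls an async check until it returns a truthy value and skips the pointless wait after the final failed attempt.

```javascript
// Hypothetical helper: retry an async check until it returns a truthy value.
// Resolves with that value, or with null once maxAttempts is exhausted.
async function pollFor(check, { maxAttempts = 5, intervalMs = 5000 } = {}) {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const result = await check();
    if (result) {
      return result;
    }
    // Only sleep if another attempt is coming.
    if (attempt < maxAttempts) {
      await new Promise((resolve) => setTimeout(resolve, intervalMs));
    }
  }
  return null;
}

// Example usage inside enter2FACode:
// const otpCode = await pollFor(() => get2FACode(emailAddress), { maxAttempts: 5, intervalMs: 5000 });
```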

Now, we combine everything into a single test flow.

(async function testLogin2FA() {

const testEmail = "[email protected]";

const testCreds = "password123";

// Step 1: Log in

const driver = await login(testEmail,testCreds);

// Step 2: Retrieve and enter 2FA code

if (driver) {

await enter2FACode(driver, testEmail);

await driver.quit();

}

})();
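One gap in this flow: if a step throws, driver.quit() never runs, and because most failures are only logged, the script usually exits with status 0 even when login fails, which a CI job would read as a pass. A minimal sketch of a wrapper that always closes the browser and sets a non-zero exit code; runTest is a hypothetical name, and it assumes your steps throw (rather than just log) when something goes wrong.

```javascript
// Hypothetical wrapper: run a browser test, always close the browser,
// and report failure through the process exit code so CI notices.
async function runTest(getDriver, steps) {
  let driver;
  try {
    driver = await getDriver();
    await steps(driver);
    console.log("Test passed");
    return true;
  } catch (error) {
    console.error("Test failed:", error);
    process.exitCode = 1;
    return false;
  } finally {
    // Runs on success and failure alike, so no browser windows are left behind.
    if (driver) {
      await driver.quit();
    }
  }
}

// Example usage with the functions above (assuming they throw on failure):
// runTest(
//   () => login(testEmail, testCreds),
//   (driver) => enter2FACode(driver, testEmail)
// );
```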

main.js File

Here’s what our full file now looks like:

const { Builder, By, until } = require('selenium-webdriver');

require('dotenv').config();

const { Mailsac } = require('@mailsac/api');

async function login(emailAddress,accountPassword) {

let driver = await new Builder().forBrowser('chrome').build();

try {

await driver.get("http://localhost:3000/users/sign_in");

await driver.findElement(By.id("email")).sendKeys(emailAddress);

await driver.findElement(By.id("password")).sendKeys(accountPassword);

await driver.findElement(By.id("sign-in")).click();

} catch (error) {

console.error("Login test failed:", error);

}

return driver;

}

async function get2FACode(emailAddress) {

try {

console.log(`Checking inbox for: ${emailAddress}`);

// Fetch the latest messages from the inbox

const mailsac = new Mailsac({ headers: { "Mailsac-Key": process.env.MAILSAC_API_KEY } });

const results = await mailsac.messages.listMessages(emailAddress);

const messages = results.data;

if (!messages.length) {

console.log("No 2FA email received yet.");

return null;

}

// Get the latest message ID

const latestMessageId = messages[0]._id;

// Fetch email body

const emailBody = await mailsac.messages.getBodyPlainText(emailAddress,latestMessageId,{ download : true });

// Extract 6-digit 2FA code using regex

const match = emailBody.data.match(/\b\d{6}\b/);

if (match) {

console.log(`2FA Code found: ${match[0]}`);

return match[0];

} else {

console.log("No 2FA code found in email.");

return null;

}

} catch (error) {

console.error("Error retrieving 2FA email:", error);

}

}

async function enter2FACode(driver, emailAddress) {

let attempts = 0;

let otpCode = null;

// Polling for 2FA code with a maximum of 5 attempts

while (!otpCode && attempts < 5) {

otpCode = await get2FACode(emailAddress);

if (!otpCode) {

console.log("Waiting for 2FA code...");

await new Promise(resolve => setTimeout(resolve, 5000)); // Wait 5 seconds before retrying

attempts++;

}

}

if (!otpCode) {

console.error("Failed to retrieve 2FA code after multiple attempts.");

return;

}

try {

// Enter the 2FA code into the form

await driver.findElement(By.id("otp_code")).sendKeys(otpCode);

// Submit the form

await driver.findElement(By.name("commit")).click();

console.log("2FA code submitted, verifying login...");

// Wait for successful login redirect

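// Assumes the post-login landing page is the site root; urlContains("/") matches every URL, so it never fails

await driver.wait(until.urlIs("http://localhost:3000/"), 10000);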

console.log("Login successful!");

} catch (error) {

console.error("2FA verification failed:", error);

}

}

// Main Test Logic

(async function testLogin2FA() {

const testEmail = "[email protected]";

const testCreds = "password123";

// Step 1: Log in

const driver = await login(testEmail,testCreds);

// Step 2: Retrieve and enter 2FA code

if (driver) {

await enter2FACode(driver, testEmail);

await driver.quit();

}

})();

Now try running the whole login test:

node main.js

And just like that, we have an automated way to test outbound emails, logins, one-time passwords and the landing page a user reaches after signing in!

Login authentication is a critical part of any web application, and failing to test it properly can lead to frustrated users and costly support issues. By combining Selenium for browser automation and Mailsac for email-based 2FA verification, we’ve built a fully automated test that ensures your app’s login process works smoothly.

With this setup, your testing team can:

This approach not only saves time but also improves the reliability of your authentication system. You can now integrate this script into your CI/CD pipeline to ensure every update maintains seamless login functionality.

Visit our forums if you have any questions. Now it’s your turn—try running the script, tweak it for your specific use case, and start automating your login tests today!

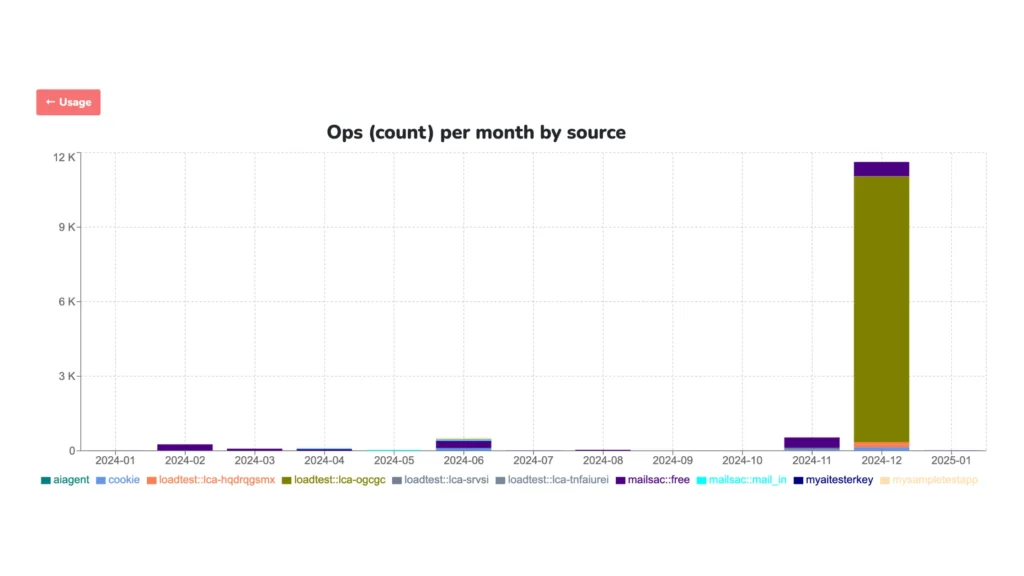

Email performance can make or break your app—especially during high-demand events like Black Friday sales, onboarding campaigns, or critical notifications. That’s why we’re excited to introduce our new Email Load Testing feature: a stress-testing tool designed to help you simulate real-world email loads quickly, efficiently, and affordably.

Now you can confidently validate your email systems without impacting production environments or breaking the bank. We’ll walk you through the essentials of email load testing and show you how to use a simple script to make the most of our new feature.

Traditional load testing often comes with challenges:

We focused specifically on solving these problems. By isolating your tests on unique, disposable subdomains, the feature ensures that you have:

Setting up your first load test is quick and easy:

Create a Load Test Subdomain in the Mailsac Dashboard, for example test-load.loadtester.msdc.co. Any email sent to this subdomain will be tracked in real time. This subdomain lets you simulate thousands of email deliveries without polluting your production environment.

Let’s walk through a load test. Here’s an example of how a customer might simulate email traffic using our load testing feature. This script, written in Node.js with nodemailer, generates random recipient addresses on your test subdomain and sends them a batch of emails (500 by default).

const nodemailer = require('nodemailer');

// SMTP Configuration

const transporter = nodemailer.createTransport({

host: process.env.SMTP_HOST,

port: Number(process.env.SMTP_PORT), // environment variables are strings; nodemailer expects a number

auth: {

user: process.env.SMTP_USER,

pass: process.env.SMTP_PASSWORD,

},

});

// Generate Random Emails

const generateRandomEmail = () => {

const randomString = Math.random().toString(36).substring(2, 15);

return `test-${randomString}@test-load.loadtester.msdc.co`;

};

// Send Email Function

const sendEmail = async (index) => {

try {

const mailOptions = {

from: process.env.FROM_EMAIL,

to: generateRandomEmail(),

subject: `Test Email #${index}`,

text: `This is email number ${index}`,

};

await transporter.sendMail(mailOptions);

console.log(`Successfully sent email #${index}`);

} catch (error) {

console.error(`Failed to send email #${index}:`, error.message);

}

};

// Run Load Test

const runLoadTest = async () => {

const totalEmails = 500; // Number of emails to send

for (let i = 0; i < totalEmails; i++) {

await sendEmail(i);

}

};

runLoadTest();

Make sure every recipient address uses your own load test subdomain (the script above uses test-load.loadtester.msdc.co).
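The runLoadTest loop above awaits each sendMail call before starting the next, so throughput is limited to one SMTP round trip at a time. If you want to push more volume, one option is a small worker pool that keeps a fixed number of sends in flight. A minimal sketch: runWithConcurrency is a hypothetical helper, sendEmail is the function from the script above, and the right concurrency depends on what your SMTP provider allows.

```javascript
// Hypothetical helper: run task(i) for i = 0..total-1 with at most
// `concurrency` tasks in flight at once. Returns how many tasks completed.
async function runWithConcurrency(total, concurrency, task) {
  let next = 0;
  let completed = 0;

  // Each worker claims the next index until none remain. Claiming is
  // synchronous (next++), so two workers never get the same index.
  const worker = async () => {
    while (next < total) {
      const index = next++;
      await task(index); // sendEmail catches its own errors, so one failure won't stop the run
      completed++;
    }
  };

  const workers = Array.from({ length: Math.min(concurrency, total) }, worker);
  await Promise.all(workers);
  return completed;
}

// Example usage with sendEmail from the script above:
// runWithConcurrency(500, 10, sendEmail).then((n) => console.log(`Finished ${n} sends`));
```

If you raise concurrency, nodemailer’s pooled SMTP option (pool: true on createTransport) can reuse connections instead of opening a new one per message.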

Once your test is running, log into the Mailsac dashboard to monitor:

These insights help you pinpoint weaknesses in your email infrastructure, allowing you to make improvements before scaling up.

Our Email Load Testing is designed to help you stress-test your email systems with ease, but it’s only the beginning. Here are some ways to take your testing further:

Remember, Mailsac’s Email Load Testing is designed for manual or semi-automated testing scenarios—not for full CI/CD integration. This keeps the focus on controlled, actionable insights.

With Mailsac, you can confidently validate your email systems without unnecessary complexity or expense. Ready to see it in action? Sign up today and let’s make email testing smarter, faster, and more reliable.

We are excited to announce upgrades to the Mailsac Platform, recently deployed in November 2024.

Mailsac is the first QA Disposable Email Platform which allows Load Testing or Burn Testing of SMTP sender servers. You can safely send enormous amounts of test email to Mailsac after creating a Load Test Subdomain in the Mailsac Dashboard. When we receive these emails to a special subdomain, they are not subject to the (already high) throttling limits of the main platform. Note that emails are not saved/indexed as normal, so this is a feature specifically for testing your capacity to send high volume email campaigns.

More tutorials for Load Testing email will be coming soon.

We overhauled the backend systems for usage and analytics. You may have noticed sluggishness in the past on these features, but that should be gone for good. There’s also an updated user interface for debugging inbound mail and webhooks.

Today, we’re tackling a big question: With the rise of code generators and AI that can make UI elements based on a single drawing, will AI take your software testing job?

The short answer is no: AI won’t take your job. Instead, we think it will enhance the way you work. Let’s see how we can make AI a powerful ally for software testers.

We like to think of AI in the testing world’s context as a controlled chaos maker. We can simulate some parts of being human to introduce some unpredictability in our tests and see whether our app holds up against the chaos.

Instead of fighting AI, let’s embrace it. One way to do that is to have it write test cases for us, execute them, and tell us whether they passed.

We can then easily move those generated tests to a framework like Cypress or to a continuous integration environment like GitHub Actions.

So for the rest of the article, we’ll show you how you can have AI make a test case that:

To be safe and not risk sending any emails out to customers, we’ll use Mailsac and its API.

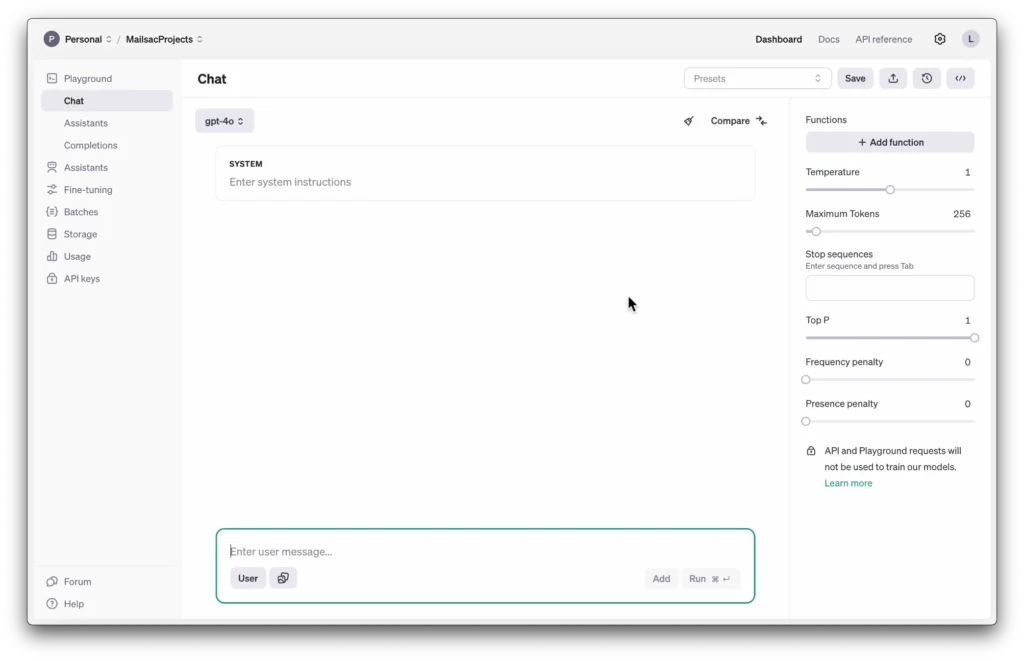

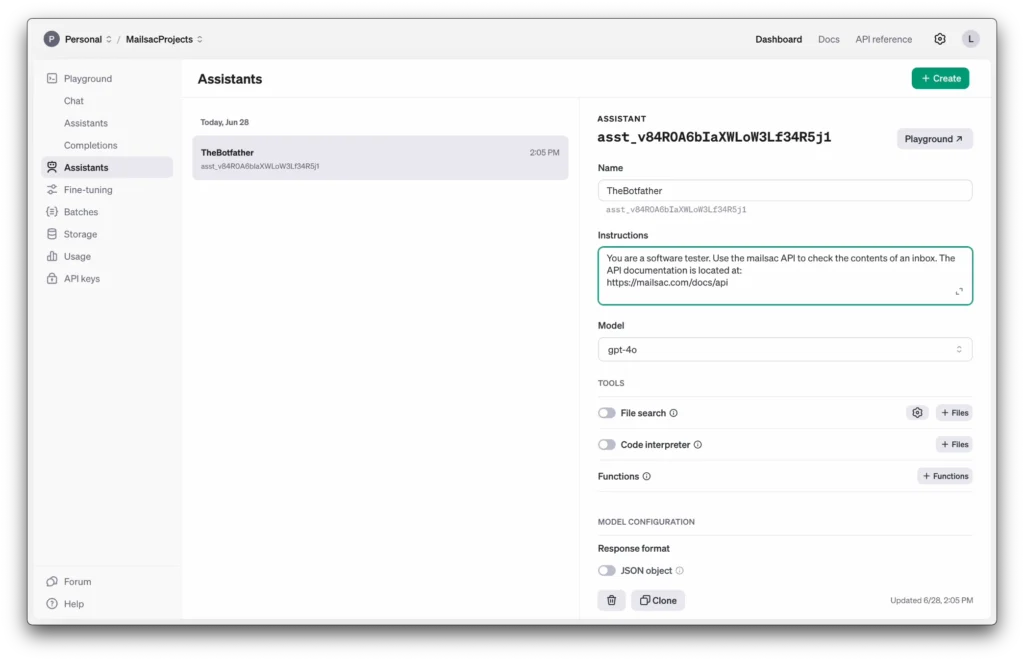

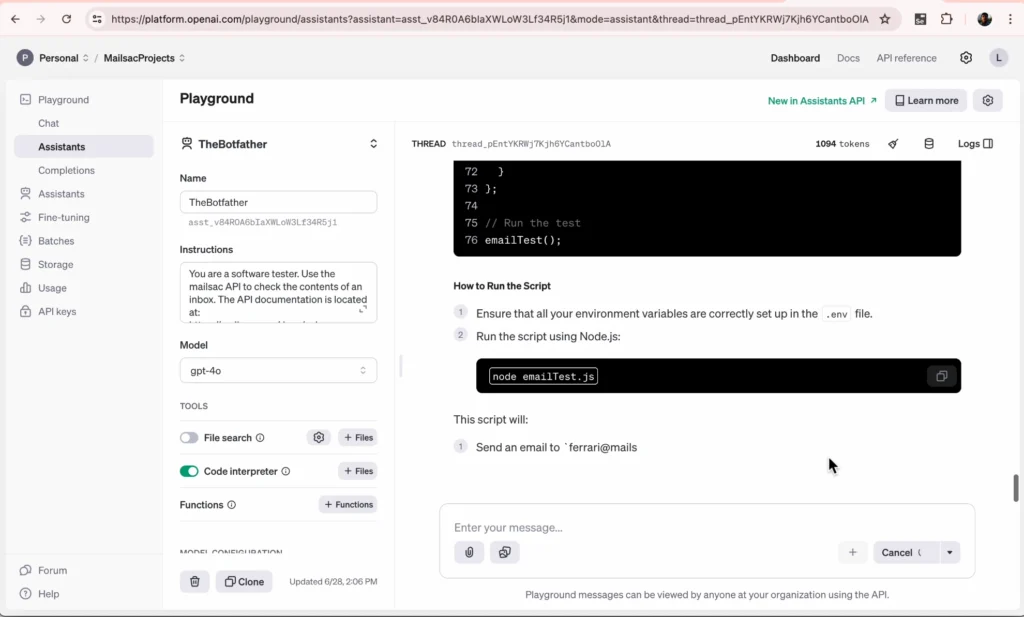

For this walkthrough we’re going to use OpenAI’s “Assistant” feature. Assistants sit roughly halfway between the chat AI we all know and a more autonomous AI agent.

We want to give this AI the ability to generate and run some code and read files, but not necessarily make any autonomous decisions.

For the instructions, we’ll give it a system prompt that tells it what context it should operate in.

We’ll use the latest gpt-4o model and enable the code interpreter tool, which lets it read files and write and run code for us.
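If you’d rather script this setup than click through the dashboard, the official openai Node package exposes assistant creation as client.beta.assistants.create. A minimal sketch: the name and instruction text are placeholders rather than the prompts used in this walkthrough, and the client is passed in so the function can be tried with a stub.

```javascript
// Sketch: create an Assistant on gpt-4o with the code interpreter tool enabled.
// `client` is an instance of OpenAI from the official "openai" package
// (it reads OPENAI_API_KEY). The name and instructions are placeholders.
async function createTestAssistant(client) {
  const assistant = await client.beta.assistants.create({
    name: "Email Test Writer",
    instructions:
      "You write small, self-contained JavaScript tests. Explain setup steps and report whether each test passes.",
    model: "gpt-4o",
    tools: [{ type: "code_interpreter" }],
  });
  return assistant.id;
}
```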

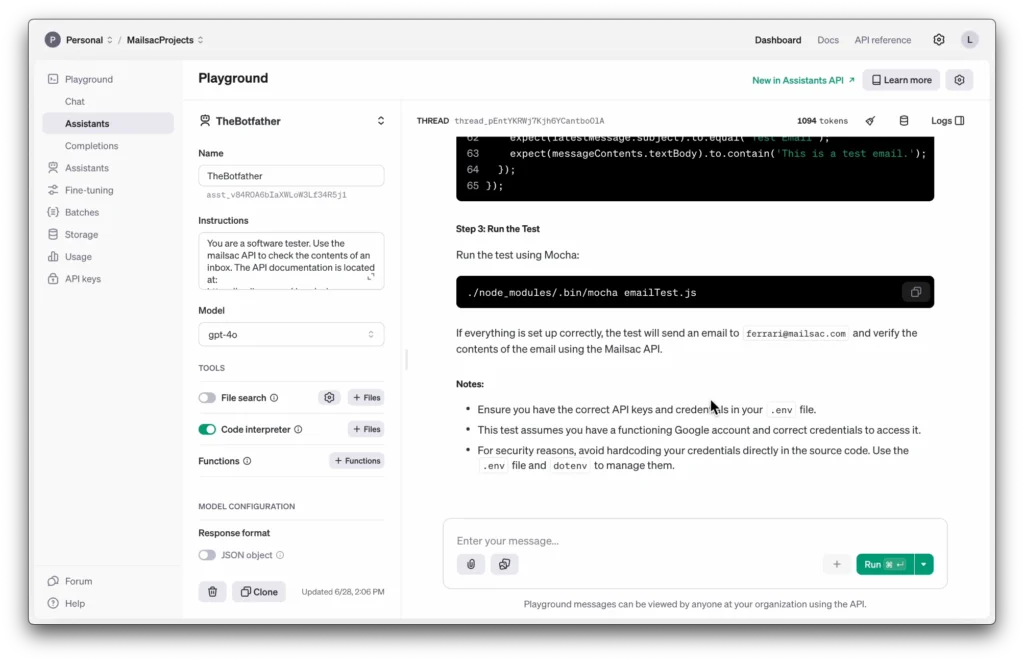

Now let’s go ahead and start generating our test cases.

We’ll use the prompt

You are creating a code test that will return true or false if a certain criteria passes. The language for all tests should be in javascript. Use dotenv for your API keys.

The criteria is:

- Generate an email message and send it via nodemailer using google’s SMTP servers. Send it to [email protected].

- Wait 2 seconds for the email to send from Google then use the mailsac API to check the subject and content of the email.

- Make the test pass if it matches what was sent in 1 and fail if it doesn’t match.

Keep in mind that you can easily swap the SMTP settings for your own service. We’ll stick with Google’s, as it’s the most accessible for this walkthrough.

Fire it off to the AI Assistant and let it generate the test case. In this run it generated a Mocha test. Earlier iterations didn’t produce a complete Mocha test, so I’ll ask it to just use plain Node.

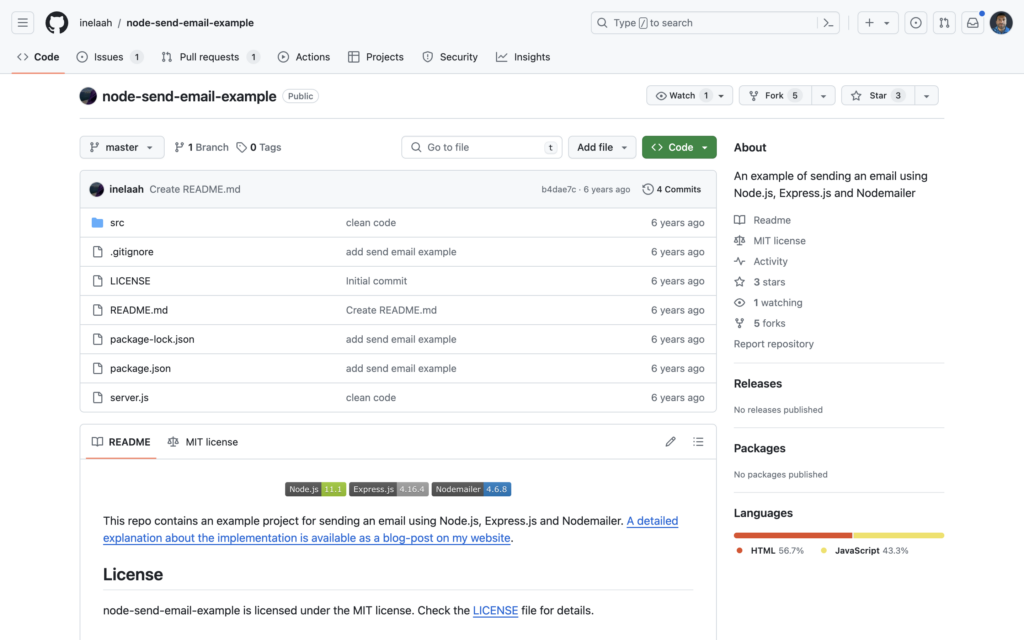

As you can see it pretty much walks you through the whole project creation process. Let’s go ahead and do our setup and install.

mkdir email_test

cd email_test

npm init -y

npm install nodemailer dotenv axios

The libraries it wants to use are pretty standard. (You could make an argument against axios but hey, let’s roll with it).

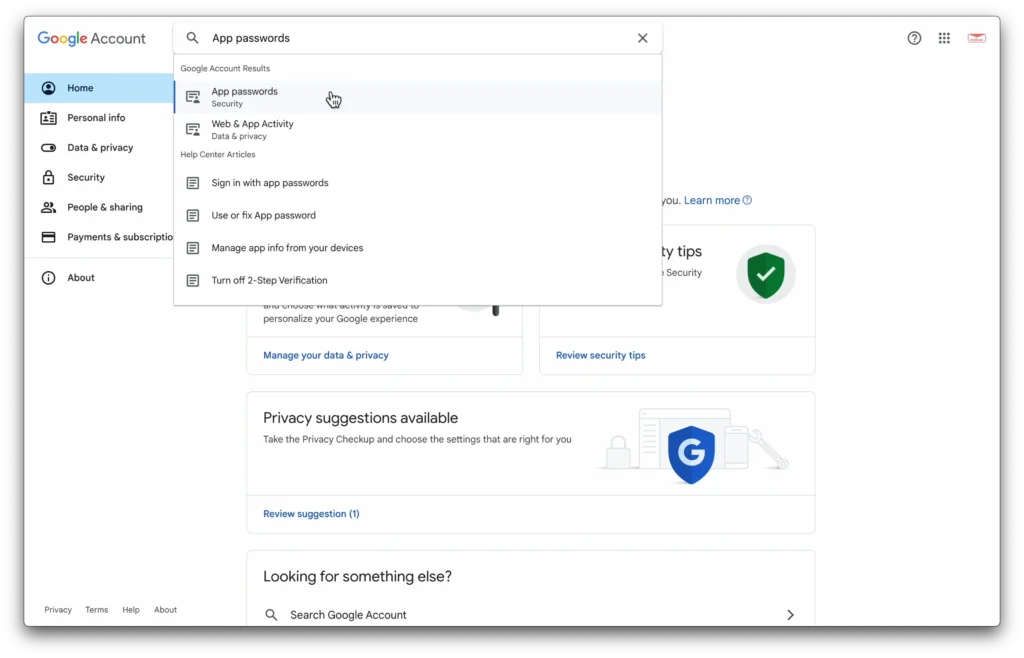

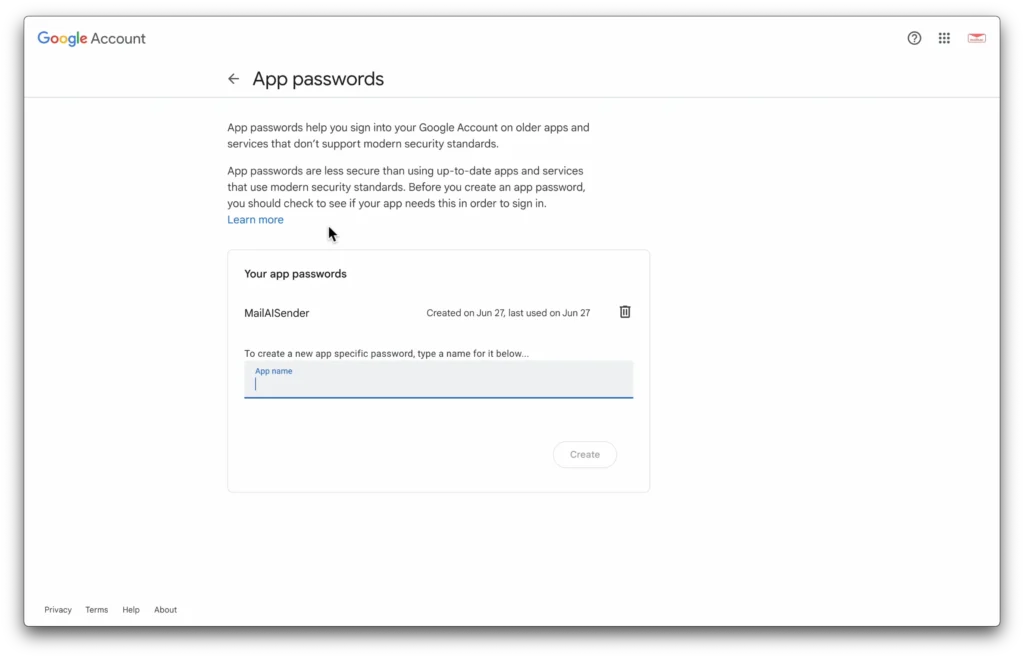

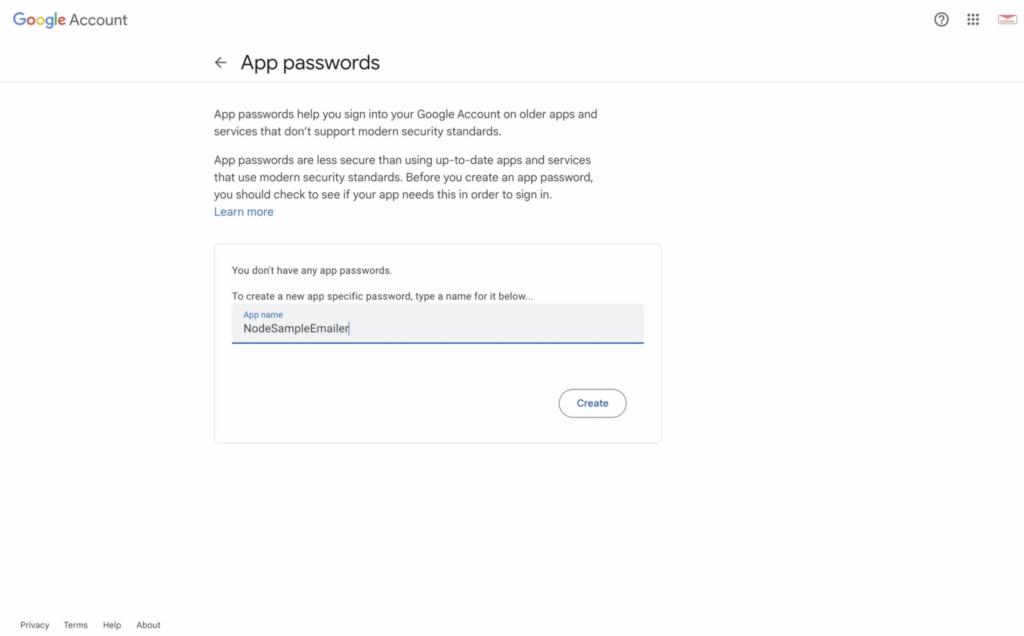

Now we’ll use a .env file to hold our API keys. You’ll need an app password for Google’s SMTP server.

You can find where to add that in your Google account.

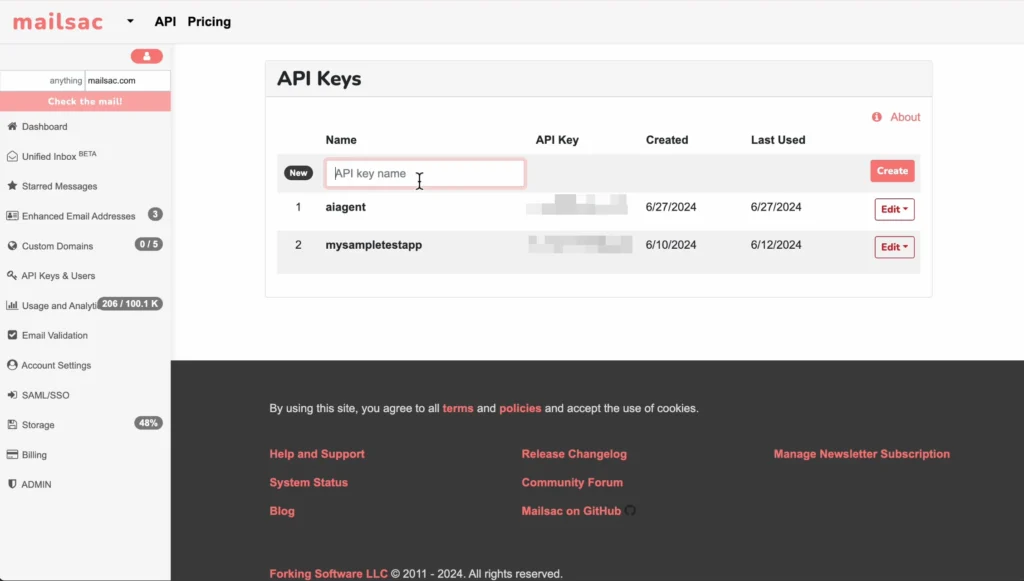

You’ll also need a Mailsac API key.

The .env file is now:

MAILSAC_API_KEY=your_mailsac_api_key

GOOGLE_EMAIL=your_google_email

GOOGLE_PASSWORD=your_google_app_password

Now copy your keys and email address into the .env file.

Let’s start working through the code itself.

Looking through the code, it looks fine with two exceptions: it needs to be guided to the right API endpoint (/api/text instead of /api/messages), and the object it retrieves is the text itself, so we can remove the nonexistent textBody child property.
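For reference, here is roughly what the corrected check looks like when calling the Mailsac REST API directly. This is a sketch, not the assistant’s actual output: it uses Node 18+’s built-in fetch instead of axios, assumes the /api/addresses/{email}/messages listing endpoint and the /api/text/{email}/{messageId} plain-text endpoint with the Mailsac-Key header (check these against the Mailsac API documentation), and takes fetchImpl as a parameter so it can be exercised without network access.

```javascript
// Sketch: verify the newest message in a Mailsac inbox matches what was sent.
// Assumed endpoints:
//   GET /api/addresses/{email}/messages   -> array of messages
//   GET /api/text/{email}/{messageId}     -> plain-text body
const MAILSAC_BASE = "https://mailsac.com/api";

async function verifyLatestEmail({ address, subject, text, apiKey, fetchImpl = fetch }) {
  const headers = { "Mailsac-Key": apiKey };
  const inbox = encodeURIComponent(address);

  const listRes = await fetchImpl(`${MAILSAC_BASE}/addresses/${inbox}/messages`, { headers });
  if (!listRes.ok) throw new Error(`Listing messages failed: HTTP ${listRes.status}`);
  const messages = await listRes.json();
  if (messages.length === 0) return false;

  // Assumes the newest message comes first, as in the Selenium example.
  const latest = messages[0];
  if (latest.subject !== subject) return false;

  const textUrl = `${MAILSAC_BASE}/text/${inbox}/${encodeURIComponent(latest._id)}`;
  const textRes = await fetchImpl(textUrl, { headers });
  if (!textRes.ok) throw new Error(`Fetching body failed: HTTP ${textRes.status}`);
  // The response body is the text itself, so there is no textBody field to unwrap.
  const body = await textRes.text();
  return body.trim() === text.trim();
}
```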

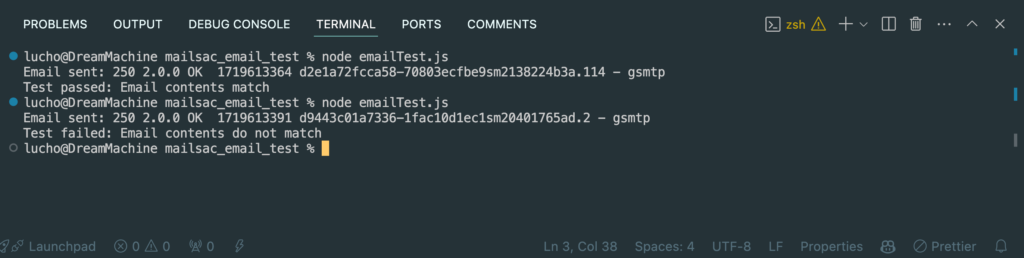

Now let’s go ahead and run the test.

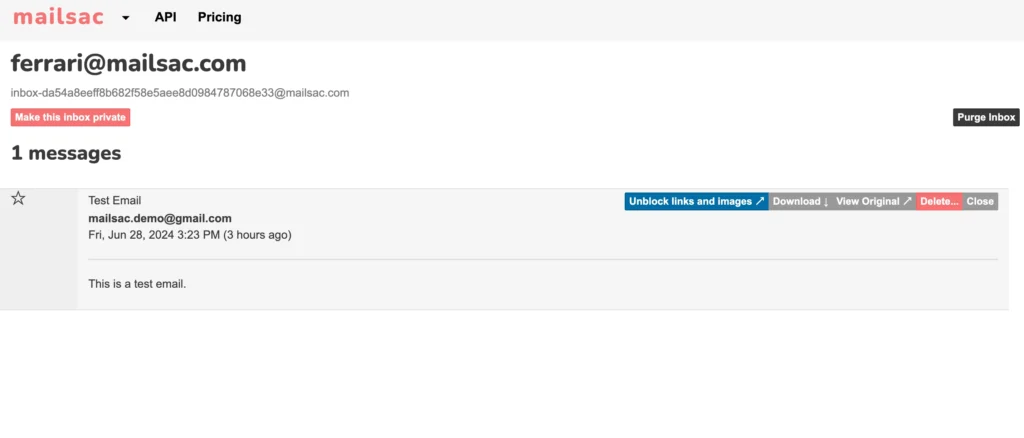

Check Mailsac to visually confirm that the email arrived.

You can even make the test fail on purpose to ensure it’s working as intended.

It works!

From here it’s easy to incorporate these kinds of tests into specific continuous integration frameworks of your choice.

You could use Cypress and work with existing libraries or an existing framework to run your tests.

Or you could use GitHub Actions to run your AI-generated tests in the cloud. We have a guide showing you how to do just that.

As you can see, it still took a human to decide what to test, frame it, and tweak the AI’s output so that what it generated made sense.

Testers aren’t going anywhere anytime soon, especially if you let AI do the legwork while you focus on what’s important to test and why.

Developing and testing an application is difficult enough without the stress of GDPR, CAN-SPAM, accidental information leaks, etc. But it doesn’t have to be.

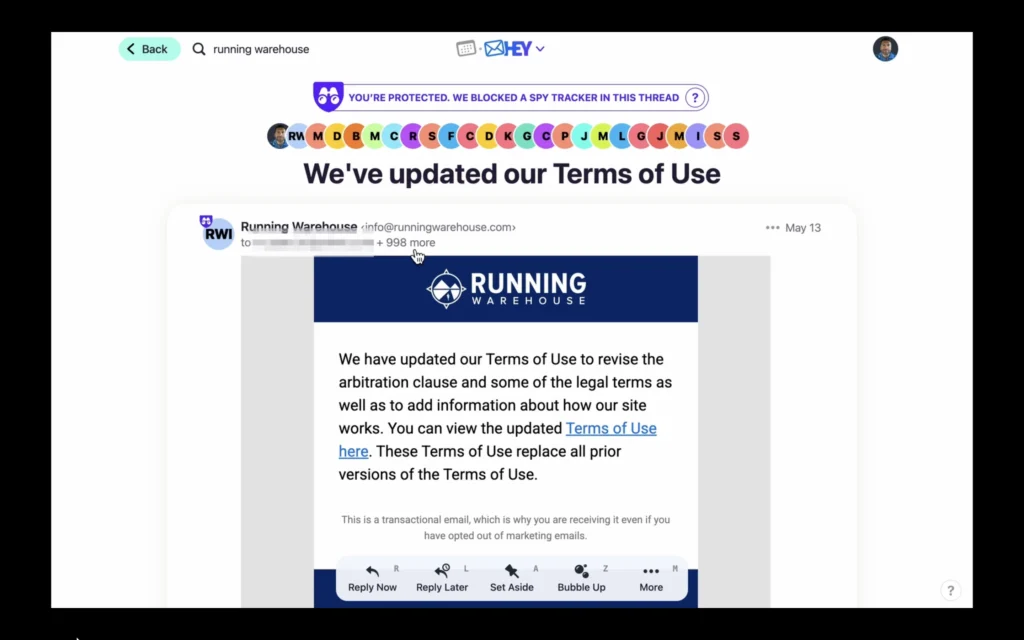

In this article I’ll walk you through how our email platform could have helped prevent Running Warehouse from exposing thousands of their customers’ email addresses.

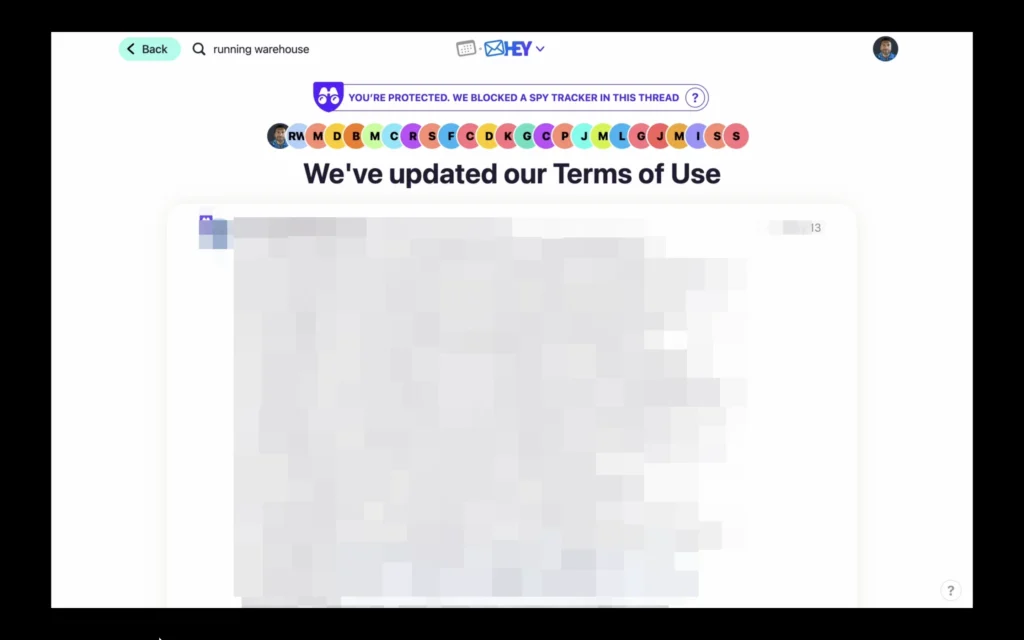

Now, this isn’t meant to poke fun at a company’s mistake but to highlight just how easy these mistakes are to miss. Last month, I received two emails from “Running Warehouse” about an update to their terms of service. Nothing unusual at first glance… until you notice that about 1000 other customers’ email addresses had been included in the CC field. And to add insult to injury, they did this TWICE. So now I have a list of about 2000 Running Warehouse customers, and I’m sure those customers aren’t happy about it.

Really not a good look for a company that could be facing a class action lawsuit over a previous data breach in 2021.

So let’s look at the implications of this easy to do mistake:

Obviously no company wants that! This is where Mailsac steps in. We help prevent these kinds of easy mistakes at the application level. We have a set of features that let you hook your continuous integration up to our API to ensure the email you meant to send is the one the recipient actually received.

So let’s walk through how we’d prevent this if this email was coming from our application.

Here’s what we’ll do:

Let’s get started.

Before we fire off an email, let’s add some quick code to check whether what we send is the same or not once it’s received.

First, install the Mailsac API client:

npm install @mailsac/api

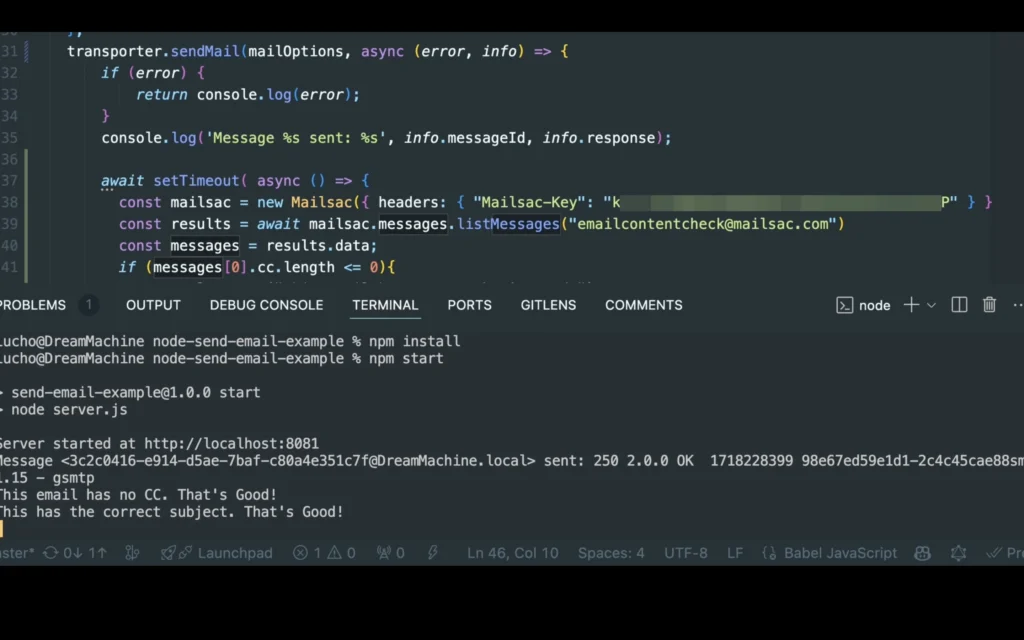

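// Assumes this runs inside the async route handler that sent the email (req.body.subject is the subject it sent)

const { Mailsac } = require('@mailsac/api');

// Give the message a second to arrive; awaiting setTimeout's return value does not pause

await new Promise((resolve) => setTimeout(resolve, 1000));

const mailsac = new Mailsac({ headers: { "Mailsac-Key": process.env.MAILSAC_KEY } });

const results = await mailsac.messages.listMessages("[email protected]");

const messages = results.data;

if (messages.length && (messages[0].cc || []).length === 0) {

console.log("This email has no CC. That's Good!");

}

if (messages.length && messages[0].subject === req.body.subject) {

console.log("This has the correct subject. That's Good!");

}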

The API key is read from an environment variable, so keep it in your .env file rather than pasting it directly into the headers.

Alright now let’s fire this up and fire off an email

This email is intended only for emailcontentcheck

Hey, this email is strictly for [email protected]. No others on cc field.

Right after our code fires off, it should print the message comparison result. Now let’s also make sure our CC fields are empty:

// Give the message a second to arrive; awaiting setTimeout's return value does not pause

await new Promise((resolve) => setTimeout(resolve, 1000));

const mailsac = new Mailsac({ headers: { "Mailsac-Key": process.env.MAILSAC_KEY } });

const results = await mailsac.messages.listMessages("[email protected]");

const messages = results.data;

if (messages.length && (messages[0].cc || []).length === 0) {

console.log("This email has no CC. That's Good!");

}

if (messages.length && messages[0].subject === req.body.subject) {

console.log("This has the correct subject. That's Good!");

}

We’re even throwing in a subject line check. You can check out the full API documentation to see how you can check for empty BCCs, content checks, and more.
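The same idea extends to a full recipient check: exactly one address in To, nothing in CC or BCC, and a matching subject. A minimal sketch of a pure function you could run against messages[0]; it assumes Mailsac message objects expose to, cc and bcc as arrays of { address, name } objects, so confirm the field names against the API documentation. checkMessageIntegrity is a hypothetical name.

```javascript
// Hypothetical helper: list problems with a received message, or [] if it looks right.
// Assumes Mailsac-style fields: to/cc/bcc are arrays of { address, name }.
function checkMessageIntegrity(message, { expectedTo, expectedSubject }) {
  const problems = [];
  const to = message.to || [];
  const cc = message.cc || [];
  const bcc = message.bcc || [];

  if (to.length !== 1 || to[0].address.toLowerCase() !== expectedTo.toLowerCase()) {
    const got = to.map((r) => r.address).join(", ") || "nobody";
    problems.push(`Expected only ${expectedTo} in To, got ${got}`);
  }
  if (cc.length > 0) {
    problems.push(`CC should be empty but has ${cc.length} recipient(s)`);
  }
  if (bcc.length > 0) {
    problems.push(`BCC should be empty but has ${bcc.length} recipient(s)`);
  }
  if (message.subject !== expectedSubject) {
    problems.push(`Subject mismatch: "${message.subject}"`);
  }
  return problems;
}

// Example usage after listMessages:
// const problems = checkMessageIntegrity(messages[0], { expectedTo: inbox, expectedSubject: req.body.subject });
// if (problems.length) console.error(problems.join("\n"));
```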

Fire it off again and enjoy having an automated way to test your email content, CC fields, and more!

Finding the right words to use in an email is already hard enough, don’t complicate it by worrying about whether the email went through, if it works right, if it accidentally cc’s people, etc.

Just let Mailsac handle the double-checking for you.

And if you want to dive even deeper into email testing, we have a full set of articles on integrating with Cypress or integrating other testing tools like Selenium with GitHub Actions for a fully automated testing pipeline.

Email is a crucial communication tool for businesses, even in 2024. It’s simple and effective. But in its simplicity we can find ourselves complacent in how we use it. Or even who we send our emails to.

In this article, we’ll examine five real-life case studies where emails went wrong and caused significant problems for the organizations that sent them. From exposing private information to damaging a company’s reputation, these examples highlight the potential risks of email errors. By understanding these incidents, businesses can learn the importance of proper email management and take steps to prevent similar mistakes. These case studies serve as a reminder that attention to detail is essential in all aspects of communication.

In June 2021, HBO Max accidentally sent an “Integration Test Email #1” to a large number of its subscribers. As you can guess, the email was meant for internal testing but mistakenly went out to real customers. The incident caused widespread confusion and snark on social media. Some people pointed out the optics of mistakenly sending out an email to your entire client base, but most took the email fail in jest:

Hilariously, the official HBO Max Twitter account had this explanation:

We don’t blame the intern, but we do cast some judgment on their complete lack of email service testing! Using disposable email addresses for internal testing could have prevented this. But hey, we did at least get some genuine entertainment.

Sadly, this case is not as harmless. In May 2024 Running Warehouse, a discount shoe seller, sent out what should have been a routine email about updating their terms of service.

As you can see, this wasn’t just sent to a single customer. While not the entire customer list, approximately 999 customers is pretty indicative of an email testing batching failure. And it didn’t go unnoticed either: there was at least one 30-post thread on arguably the internet’s largest running forum.

The issue is still relatively fresh as of this writing, but it’s safe to assume that Running Warehouse did not intend to leak 1000 of its customers’ email addresses to each other because someone mixed up CC and BCC. More seriously, it could even be a violation of GDPR under “Sharing Email Addresses Without Consent” according to GDPR’s Data Breach Guidelines.

In January 2019, the University of South Florida St. Petersburg mistakenly sent acceptance emails to nearly 680 applicants, although only 250 had been accepted. As you can imagine this caused confusion, disappointment, and reputational damage to the university. The affected students and their families were left feeling misled, and the university had to invest considerable time and resources to address the fallout from the erroneous communication.

You may not be shocked to hear that this isn’t the first time this has happened, as Columbia University also accidentally sent 270 emails to students who had not been officially accepted.

These cases clearly show the impact of email errors on institutional credibility and the importance of systematic verification before sending mass emails. Systems that send email need to ensure that the emails they intend to send are 100% accurate.

In September 2020, an employee at Australia’s Department of Foreign Affairs and Trade (DFAT) accidentally exposed the personal details of almost 3,000 citizens by not using the BCC field in an email. This revealed the email addresses of Australians stranded abroad due to COVID-19 travel restrictions. The mishandling of this sensitive information led to major privacy concerns and required immediate action, such as attempting to recall the email and asking recipients to delete it.

While this wasn’t tied to an automated system, a test send to temporary email accounts could have confirmed that the message and its recipient list were correct before it reached the intended audience.

In May 2020, Serco, a business services and outsourcing company, accidentally exposed the email addresses of nearly 300 newly recruited COVID-19 contact tracers by using the CC field instead of BCC. This breach of privacy led to concerns about the security of personal data and put the company’s data protection practices under scrutiny. Serco apologized and announced that they would review and update their procedures to prevent similar incidents in the future.

Yet another case of manually managed email lists without temporary email addresses, outbound email trapping, or message integrity verification (i.e., only one person in the To field and nothing in the CC field).

We offer several benefits that can help prevent incidents like the ones described above:

Disposable email services are an essential tool for modern communication. They can help prevent costly mistakes and ensure that PR issues around email remain in the past. For those looking to enhance their email security and efficiency, we’re an excellent choice. Give Mailsac a try today and see how it can benefit your email needs.

In healthcare, email communication intersects with patient care and data security. The margin for error is virtually nonexistent. Mailsac’s SaaS platform offers a robust email delivery suite, tailored to meet the rigorous demands of healthcare IT security, compliance, and operational efficiency. Mailsac has been integrated into systems at many enterprises in the healthcare industry, and has been relied on 24/7 for email testing for over a decade.

Security Audits: Mailsac is built to pass IT department security audits, aligning with healthcare standards like HIPAA. Its infrastructure ensures patient data is safeguarded during email testing.

Data Privacy: With disposable email addresses, Mailsac supports testing that avoids exposing real patient information, maintaining privacy and compliance.

Unified Inbox: Devs and QAs can collaborate effectively using Mailsac’s unified inbox feature, which consolidates test emails from both persistent and temporary email accounts into a single view.

SSO Integration: Simplify access while enhancing security with Single Sign-On (SSO), allowing seamless integration into existing healthcare IT ecosystems.

CI/CD Integration: Mailsac’s API automates email tests within CI/CD pipelines, reducing manual effort and accelerating development cycles in fast-paced healthcare settings.

Scalable Solutions: Whether scaling up operations or integrating new services, Mailsac’s scalable platform adapts to the evolving needs of healthcare enterprises, ensuring email testing is never a bottleneck. There’s no need to manage server resources, deployments or upgrades.

Device and Platform Coverage: Guarantee that critical communications are accessible across all devices and platforms used by healthcare professionals and patients alike.

Inbox Placement: Rigorous testing with Mailsac ensures healthcare emails achieve high deliverability, crucial for appointment reminders, test results, and other sensitive communications.

Email Optimization: Test and refine email content for clarity and engagement, ensuring messages to patients are both accessible and actionable.

For healthcare organizations, Mailsac offers a precision toolset for email testing — ensuring security, efficiency, and compliance while enhancing collaborative efforts between QA and development teams. Since 2012, Mailsac has practiced technical excellence and has helped hundreds of customers in healthcare manage their unique challenges.

Email testing is an essential step in the QA process, ensuring that communications reach their intended recipients accurately and efficiently. However, even experienced QA teams can fall into common traps that undermine their efforts. Here are ten frequent email testing pitfalls and strategic ways to avoid them, streamlining your workflow and enhancing email reliability.

Not testing how emails render on mobile devices, leading to formatting issues or poor user experiences.

Use email testing tools that simulate various mobile devices and screen sizes to ensure your emails look great everywhere.

Focusing on a single email client, ignoring the fact that your audience uses a wide range of email services with different rendering engines.

Test emails across multiple clients (like Gmail, Outlook, Yahoo) to identify and fix client-specific issues.

Assuming emails reach the inbox without verifying, risking them being flagged as spam.

Conduct deliverability tests with tools that provide insights into spam scores and help optimize for better inbox placement.

Creating content that’s confusing or misleading, leading to poor user engagement.

Ensure your messages are clear, concise, and actionable. Test variations to see which performs best in terms of user engagement.

Forgetting to make emails accessible to all users, including those with disabilities.

Include text alternatives for images, use sufficient contrast ratios, and test with screen readers to ensure accessibility.

Assuming all links and attachments work without thoroughly testing them, which could lead to a frustrating user experience.

Manually check each link and attachment in different environments to ensure functionality and security.
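The link half of this check can also be automated so it runs on every build. A rough sketch, assuming Node 18+’s global fetch: it pulls href values out of an email’s HTML with a simple regular expression (good enough for generated templates, not a full HTML parser), keeps only http(s) links, and reports any that don’t return a 2xx status after redirects. findBrokenLinks and extractLinks are hypothetical helpers, and fetchImpl is injectable for testing.

```javascript
// Hypothetical helper: collect unique http(s) links from an HTML email.
function extractLinks(html) {
  const links = new Set();
  const hrefPattern = /href\s*=\s*["']([^"']+)["']/gi;
  for (const match of html.matchAll(hrefPattern)) {
    const url = match[1].trim().replace(/&amp;/g, "&"); // undo HTML entity escaping
    if (/^https?:\/\//i.test(url)) links.add(url); // skips mailto:, tel:, #anchors
  }
  return [...links];
}

// Hypothetical helper: return the links that fail to load.
async function findBrokenLinks(html, fetchImpl = fetch) {
  const broken = [];
  for (const url of extractLinks(html)) {
    try {
      // HEAD avoids downloading bodies; some servers reject it, so switch to GET if you see 405s.
      const res = await fetchImpl(url, { method: "HEAD" });
      if (!res.ok) broken.push({ url, status: res.status });
    } catch (error) {
      broken.push({ url, status: null, error: error.message });
    }
  }
  return broken;
}
```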

Overloading emails with high-resolution images or complex HTML, leading to slow loading times.

Optimize images and streamline code to improve load times, ensuring a smooth user experience.

Sending tests to outdated or incorrect email addresses, skewing testing results.

Regularly clean and validate your email lists to keep them accurate and current.
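The mechanical part of list hygiene (trimming, de-duplicating, and setting aside malformed entries) is easy to script. The sketch below is deliberately loose: the regex is a plausibility check rather than a full RFC 5322 validator, and it cannot tell whether an address is still in use.

```typescript
// Loose plausibility check: something@something.tld with no spaces.
const PLAUSIBLE_EMAIL = /^[^\s@]+@[^\s@]+\.[^\s@]+$/;

function cleanAddressList(raw: string[]): { valid: string[]; rejected: string[] } {
  const valid = new Set<string>(); // a Set drops duplicates automatically
  const rejected: string[] = [];
  for (const entry of raw) {
    // Lowercasing the whole address is a simplification; strictly, only the domain is case-insensitive.
    const address = entry.trim().toLowerCase();
    if (PLAUSIBLE_EMAIL.test(address)) valid.add(address);
    else rejected.push(entry);
  }
  return { valid: [...valid], rejected };
}

console.log(cleanAddressList([' QA@Example.com', 'qa@example.com', 'not-an-address']));
// → one valid address (qa@example.com) and one rejected entry (not-an-address)
```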

Neglecting privacy laws and email regulations, risking legal issues and damaged reputation.

Stay informed about regulations like GDPR and CAN-SPAM, ensuring your email practices are compliant.
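Some of this can be backed by a smoke test. CAN-SPAM, for example, requires commercial email to offer a way to opt out, so a test can at least confirm that every message exposes one. The sketch below only looks for a List-Unsubscribe header or an "unsubscribe" link; the function name and heuristics are illustrative, and passing it does not establish compliance with any regulation.

```typescript
interface OptOutResult {
  hasListUnsubscribeHeader: boolean;
  hasUnsubscribeLink: boolean;
}

function checkOptOut(headers: Record<string, string>, html: string): OptOutResult {
  // Header names are case-insensitive, so compare them lowercased.
  const hasListUnsubscribeHeader = Object.keys(headers).some(
    (key) => key.toLowerCase() === 'list-unsubscribe',
  );
  // Match an <a> whose link text or href mentions "unsubscribe" (case-insensitive).
  const hasUnsubscribeLink =
    /<a\b[^>]*>[^<]*unsubscribe[^<]*<\/a>|<a\b[^>]*href\s*=\s*["'][^"']*unsubscribe/i.test(html);
  return { hasListUnsubscribeHeader, hasUnsubscribeLink };
}
```

A test would typically assert that at least one of the two fields is true for every outgoing template.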

Performing repetitive tests manually, which is time-consuming and prone to human error.

Incorporate automated testing workflows to save time, reduce errors, and increase efficiency.

By being mindful of these common pitfalls and implementing the suggested solutions, QA teams can significantly improve their email testing processes. Tools like Mailsac offer zero-configuration custom private domains, comprehensive Swagger REST APIs, and a generous free tier, making it easier for teams to test emails effectively and efficiently. Remember, the goal is not just to send emails but to ensure they are delivered, readable, and engaging across all devices and clients.

These days, we have better options than writing our test specs by hand.

The open source community has released a variety of frameworks to relieve us of that tedium, including Cypress, Selenium and Puppeteer. In this video companion guide, I'll focus on Playwright, and specifically on how Playwright's codegen tool can automate much of the boilerplate involved in writing test specs.

The video gives a quick walkthrough of Playwright itself. This article provides the core login.spec.ts file I used and suggests where to go next.

Before we do that, it's worth mentioning that efficient testing isn't just about having the right tools; it's also about integrating those tools smoothly into your existing systems. That's where Mailsac comes in. Mailsac lets you receive and inspect real emails from within your automated workflows, making it an excellent complement to Playwright for end-to-end testing.

With Mailsac, you can ensure not just the functionality but also the integrity of email interactions in your applications, all within the automation framework you’ll establish with Playwright.
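To give a rough idea of what that looks like in a test, here is a minimal polling helper that waits for a message to arrive in a Mailsac inbox. It calls the REST API directly with fetch (Node 18+) rather than through a client library. The base URL, the GET /addresses/{email}/messages endpoint, the Mailsac-Key header, and the _id and subject fields are assumptions to verify against the Mailsac API reference; the helper's name and options are hypothetical.

```typescript
interface MailsacMessage {
  _id: string;
  subject: string;
}

// Narrow fetch-like type so a stub can be injected in unit tests.
type FetchLike = (url: string, init?: { headers?: Record<string, string> }) => Promise<{
  ok: boolean;
  status: number;
  json(): Promise<unknown>;
}>;

// Poll the inbox until at least one message exists, or give up after `attempts` tries.
async function waitForMessage(
  address: string,
  apiKey: string,
  { attempts = 10, delayMs = 3000, fetchImpl = fetch as FetchLike } = {},
): Promise<MailsacMessage> {
  // Assumed endpoint; check it against the Mailsac API reference.
  const url = `https://mailsac.com/api/addresses/${encodeURIComponent(address)}/messages`;
  for (let i = 0; i < attempts; i++) {
    const res = await fetchImpl(url, { headers: { 'Mailsac-Key': apiKey } });
    if (!res.ok) throw new Error(`Mailsac API returned HTTP ${res.status}`);
    const messages = (await res.json()) as MailsacMessage[];
    // Assumes the newest message is listed first.
    if (messages.length > 0) return messages[0];
    await new Promise((resolve) => setTimeout(resolve, delayMs));
  }
  throw new Error(`No message arrived for ${address} after ${attempts} attempts`);
}
```

Inside a Playwright test you would trigger the email from the UI, call waitForMessage with the address under test, then fetch the message body (Mailsac also exposes endpoints for a message's text and HTML) and assert on its contents.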

Playwright is an open-source automation library created by Microsoft. It’s designed to enable developers and testers to write reliable and efficient tests for web applications.

It's cross-platform and officially supports Chromium, Firefox, and WebKit.

Start by installing Playwright inside your project:

npm init playwright@latest

I'll be using the defaults for this guide. After installation, you'll have these files in your project:

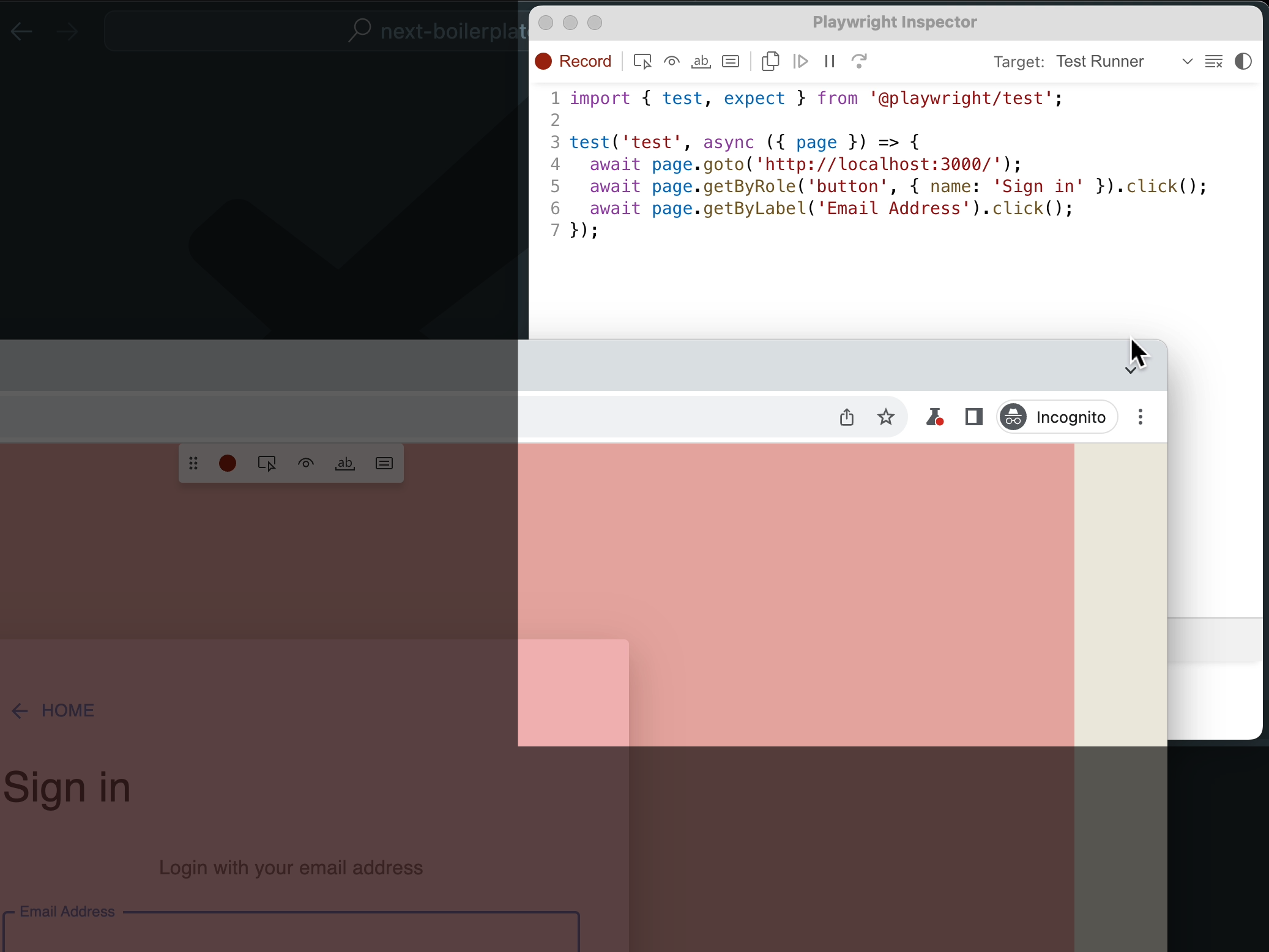

Next, launch codegen from the command line:

npx playwright codegen

As you click around your application, codegen records each action and picks a locator for every element, preferring role, label, text, and test-id locators over raw CSS selectors.

It writes one line per action as you work through your test. In the video, we attempt a login first with incorrect credentials and then with correct ones.

login.spec.ts file

Before our manual edits, this is what codegen generated for us:

```typescript
import { test, expect } from '@playwright/test';

test('test', async ({ page }) => {
  await page.goto('http://localhost:3000/');
  await page.getByRole('button', { name: 'Sign in' }).click();
  await page.getByLabel('Email Address').click();
  await page.locator('form div').filter({ hasText: 'Email AddressEmail Address' }).getByRole('paragraph').click();
  await page.getByLabel('Email Address').click();
  await page.getByLabel('Email Address').fill('[email protected]');
  await page.getByLabel('Email Address').press('Tab');
  await page.getByLabel('Password').fill('password');
  await page.getByLabel('Password').press('Enter');
  await page.getByLabel('Email Address').click();
  await page.getByLabel('Email Address').fill('[email protected]');
  await page.getByLabel('Email Address').press('Tab');
  await page.getByLabel('Password').fill('password123');
  await page.getByLabel('Password').press('Enter');
  await page.getByRole('button', { name: 'Settings' }).click();
  await page.getByText('Note: Your email mailsac.demo').click({
    button: 'middle'
  });
  await page.getByRole('button', { name: 'Next boilerplate' }).click();
});
```

All we had to add by hand were the two `toHaveURL` assertions (lines 13 and 19) to turn it into a real test:

```typescript
import { test, expect } from '@playwright/test';

test('test', async ({ page }) => {
  await page.goto('http://localhost:3000/');
  await page.getByRole('button', { name: 'Sign in' }).click();
  await page.getByLabel('Email Address').click();
  await page.locator('form div').filter({ hasText: 'Email AddressEmail Address' }).getByRole('paragraph').click();
  await page.getByLabel('Email Address').click();
  await page.getByLabel('Email Address').fill('[email protected]');
  await page.getByLabel('Email Address').press('Tab');
  await page.getByLabel('Password').fill('password');
  await page.getByLabel('Password').press('Enter');
  await expect(page).toHaveURL('http://localhost:3000/login'); // added by hand: a failed login stays on /login
  await page.getByLabel('Email Address').click();
  await page.getByLabel('Email Address').fill('[email protected]');
  await page.getByLabel('Email Address').press('Tab');
  await page.getByLabel('Password').fill('password123');
  await page.getByLabel('Password').press('Enter');
  await expect(page).toHaveURL('http://localhost:3000/home'); // added by hand: a successful login lands on /home
  await page.getByRole('button', { name: 'Settings' }).click();
  await page.getByText('Note: Your email mailsac.demo').click({
    button: 'middle'
  });
  await page.getByRole('button', { name: 'Next boilerplate' }).click();
});
```

By default, npx playwright test runs headless, so you won't see a browser window. To watch the browser step through your test, run:

npx playwright test --headed

The most natural next step is integrating your Playwright tests with a continuous integration platform like Travis CI or GitHub Actions. Playwright supports running in CI out of the box, and npm init playwright@latest can even generate a GitHub Actions workflow for you.
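Whichever CI platform you choose, a few settings in playwright.config.ts make CI runs more predictable. The sketch below uses real Playwright config options (forbidOnly, retries, trace, webServer), but the test directory, the npm run start command, and the port are assumptions about your project.

```typescript
// playwright.config.ts: CI-friendly settings (paths, command, and port are assumptions).
import { defineConfig, devices } from '@playwright/test';

export default defineConfig({
  testDir: './tests',
  // Fail the CI run if a stray test.only was committed.
  forbidOnly: !!process.env.CI,
  // Retry flaky tests on CI only.
  retries: process.env.CI ? 2 : 0,
  use: {
    baseURL: 'http://localhost:3000',
    // Record a trace on the first retry to help debug CI-only failures.
    trace: 'on-first-retry',
  },
  projects: [{ name: 'chromium', use: { ...devices['Desktop Chrome'] } }],
  // Start the app before the tests; locally, reuse a server that is already running.
  webServer: {
    command: 'npm run start',
    url: 'http://localhost:3000',
    reuseExistingServer: !process.env.CI,
  },
});
```

With baseURL set, the spec could call page.goto('/') instead of hard-coding http://localhost:3000/. GitHub Actions sets the CI environment variable automatically, so the CI-only branches above switch on without extra configuration.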

Another possible progression is using playwright to test critical paths in your application like user registration or password reset flows. We have a full guide on how to do that with another framework, Cypress.
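For a password reset flow, the step that usually needs custom code is pulling the reset link out of the email. Here is a minimal sketch that scans a plain-text body, such as one fetched from a Mailsac inbox. The /reset path fragment is a hypothetical pattern; substitute whatever your app's reset URLs look like.

```typescript
// Find the first URL in a plain-text email body that contains the given path fragment.
function extractResetLink(text: string, pathHint = '/reset'): string | undefined {
  const urls = (text.match(/https?:\/\/[^\s<>"')]+/g) ?? [])
    // Drop trailing sentence punctuation that the regex would otherwise capture.
    .map((url) => url.replace(/[.,;:!?]+$/, ''));
  return urls.find((url) => url.includes(pathHint));
}

const sampleText = 'Hi,\nSomeone asked to reset your password.\nOpen https://app.example.com/reset?token=abc123 to continue.';
console.log(extractResetLink(sampleText)); // https://app.example.com/reset?token=abc123
```

In a Playwright test, you would then call page.goto with the extracted link and complete the new-password form.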

If you'd like us to explore integrating Playwright with email testing and GitHub Actions, or any other Playwright integration, let us know on our forums. We've only scratched the surface of what Playwright can do.

Until next time.